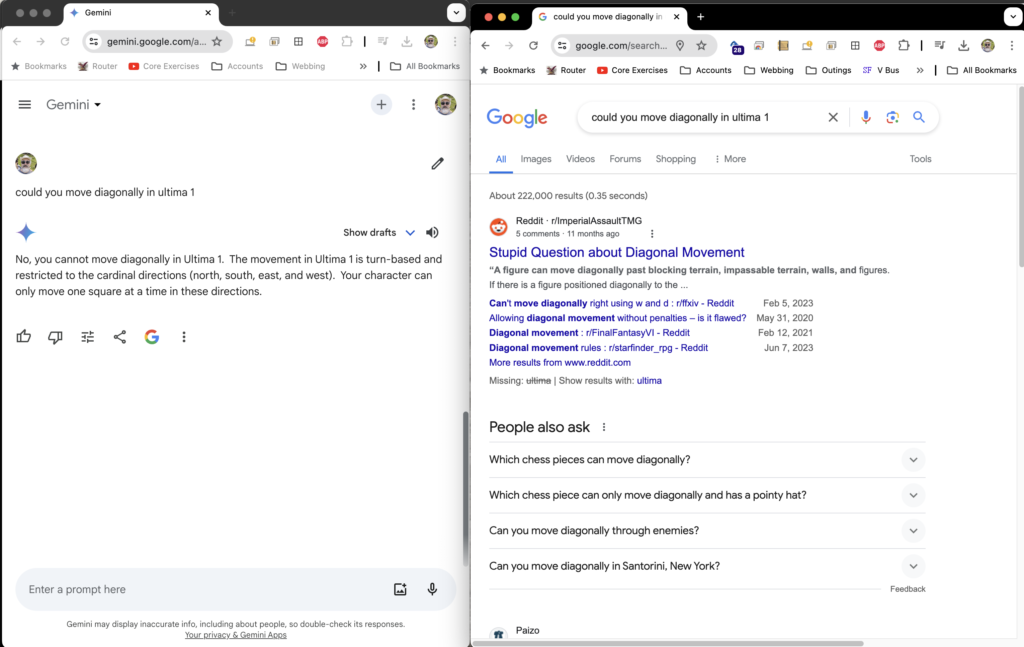

I think we're at peak LLM usefulness here. A succinct answer I'm willing to trust, no ads, nothing seeking my further engagement. Basically I can't see a way for Gemini to get any better for this type of dead-end query than where it's at right now. Prepare thyself for the crapwave.

This is a great video of Lex Fridman interviewing Max Tegmark about AI and Moloch and Consciousness. It's a really long one. Maybe watch it in 15-minute increments. I had it open as a browser tab forever, half-way watched, but finished it a few nights ago. I may revisit it, as it's pretty dense and there are a shit-tonne of ideas in there.

"I kind of love programming. It's an act of creation. You have an idea, you design it, and you bring it to life, and it does something." - Lex Fridman - at about 1:35:00. He mourns the loss of some form of "innocence". He struggles a bit with how to articulate the feeling of loss in the face of LLMs that can now code. Lex, I feel you, but...

In and of themselves, advanced LLMs do not rob creators of a pride in their art. If you're feeling a loss of "specialness", then it most likely because you mixed some ego into it. Ego is everywhere. This blog post and the way I'm posturing the language in it is mostly about ego, to make it seem like I'm "in the know" when in reality I'm just as clueless as the next person.

And while it is definitely surprising that things are moving so fast, it's not incredibly surprising to me that a model trained on the entire sum of human output up to this point can do a lot of "our special human things" so well.

On the other hand, LLMs may indeed rob creators of the ability to make a living at their art because of greed.

Greed is what bothers me the most about this whole AI Hype Cycle. Speaking of posturing, we keep hearing about how AI will benefit humanity, and I believe that it can and will. Curing disease, eliminating the slave labor class, and becoming a multi-planetary civilization seem like really important goals. But no one can possibly believe that is what is driving the research. The prime motivator is making money. Expand the profit margins of the big corporations by enabling them to do more while paying fewer humans to do it. Or grow startups so they can become big corporations or get acquired by big corporations.

The fact that we can all see this and yet do nothing different is the term "Moloch" that Max speaks about, that apparently was an ancient Carthaginian demon, but is a nice label to identify why things get shitty [1] [2] mixed with the prisoner's dilemma and the race to the bottom. I still have to read the full extent of this Scott Alexander piece, but you can get the gist from the video.

The insane push to always show growth and be more profitable than the last time. Where does it come from?

Humans are an intelligence that is constantly trying to optimize for happiness (at some biological level, literally the neurochemicals floating around in our brains giving us sensations). Long ago we equated happiness with money because it gives us the ability to pursue whatever we want. Seems logical, if sad.

Corporations as a form of intelligence is another weird thing briefly talked about in the video (1:17:00) and mentioned in their first discussion, which I found very interesting. By that argument, governments fit into the same bucket.

A corporation is an outcome of humans trying to acquire more money with less risk, it does this by being a construct that can live and operate larger than any single human can and operates with a huge amount of resources. The example Max gives is of a tobacco company CEO deciding one day that the company should stop making cigarettes - an act which would only result in that human being replaced as CEO.

A corporation, as an entity, is constantly trying to optimize for profit. Originally set up to serve society, the roles have been reversed and society is largely serving the institutions (the video also touches on how the really big corporations have captured the regulators).

Today's AI require huge amounts of compute power and data to be trained and operate - basically meaning corporations are the ones that can run the powerful ones. I wonder how long it will take for the roles to reverse such that the corporations are serving the AI.

One thought experiment: If today's AI can be turned off by killing power to a data center or deleting a model, how would an advanced AI make it impossible for this to happen? Would it be to couple the AI services to mega corporation profits such that turning off the AI means the death of the corporation? I'm not saying that huge LLMs are consciously scheming in this direction, but it certainly seems like we are pointing in a direction where giant companies naturally are serving this.

Humans gave birth to corporations and corporations are giving birth to the next wave of intelligence.